In 2018, Samsung started to release Smart TVs that support apps written in .NET. These TVs run on the Tizen operating system, which is “an open and flexible operating system built from the ground up to address the needs of all stakeholders of the mobile and connected device ecosystem, including device manufacturers, mobile operators, application developers and independent software vendors (ISVs). Tizen is developed by a community of developers, under open source governance, and is open to all members who wish to participate.” [Tizen.org]

This means that apps can be developed in Visual Studio using .NET, tested locally on a TV emulator, tested on a physical TV, and published/distributed on the Samsung Apps TV app store.

In this article you’ll learn how to set up your development environment, create your first app, and see it running on the TV emulator.

Part I - Setting Up Visual Studio for Samsung TV App Development

There are a number of steps to set up Visual Studio.

1.1 Install Java JDK

The first thing to do is install Java: under the hood, the Tizen tools require Java JDK 8 to be installed and the system environment variables to be set up correctly.

To do this the hard way, head over to the Oracle.com JDK 8 archive page and download the Windows x64 installer. Note the warning before deciding whether or not to go ahead: “WARNING: These older versions of the JRE and JDK are provided to help developers debug issues in older systems. They are not updated with the latest security patches and are not recommended for use in production.” [Oracle.com] Also note “Downloading these releases requires an oracle.com account.” [Oracle.com]

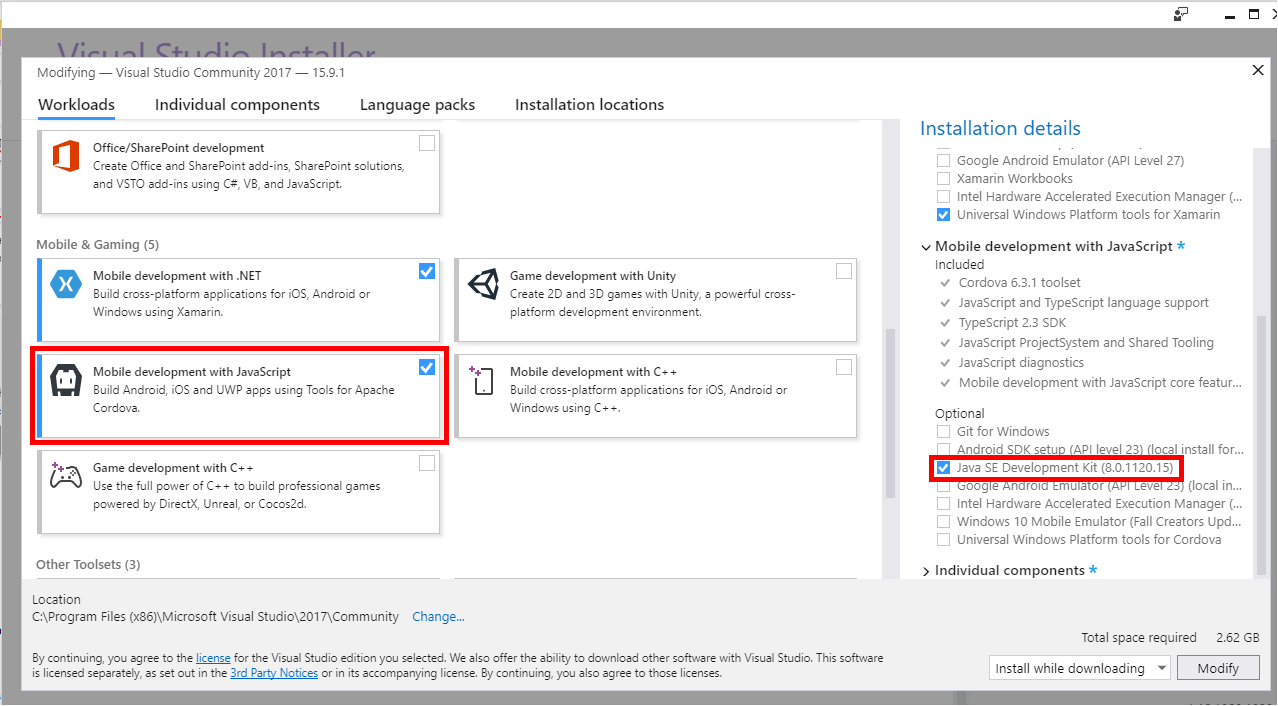

To do it the easy way, open the Visual Studio Installer, check the Mobile Development with JavaScript workload, and in the optional section tick the Java SE Development Kit option as shown in the following screenshot. (You may also want to install the Mobile Development with .NET workload, because you can use Xamarin Forms to develop Samsung TV apps.)

Once Java is installed you’ll need to modify system environment variables as follows:

- Add a system variable called JAVA_HOME with a value pointing to the path of the Java install, e.g. C:\Program Files\Java\jdk1.8.0_192

- Add a system variable called JRE_HOME with a value that points to the Java JRE directory, e.g. C:\Program Files\Java\jdk1.8.0_192\jre

- Modify the Path variable value and add to the end: %JAVA_HOME%\bin;%JRE_HOME%\bin;
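If you want to double-check these settings before moving on, a small console program along the following lines could help. This is only a sketch: the class and method names (JavaEnvCheck, IsExistingDirectory, PathContains) are made up for illustration and are not part of the Tizen or Java tooling. It reads the variables with the standard Environment.GetEnvironmentVariable call, so run it from a newly opened console so that it picks up the updated values.

```csharp
using System;
using System.IO;

// Hypothetical helper (not part of any SDK): sanity-checks the Java variables described above.
public static class JavaEnvCheck
{
    // True when the value is non-empty and names a directory that exists on disk.
    public static bool IsExistingDirectory(string value)
    {
        return !string.IsNullOrWhiteSpace(value) && Directory.Exists(value);
    }

    // True when a semicolon-separated Path value lists the given directory
    // (case-insensitive, ignoring a trailing slash).
    public static bool PathContains(string pathValue, string directory)
    {
        if (string.IsNullOrEmpty(pathValue) || string.IsNullOrEmpty(directory))
        {
            return false;
        }

        string wanted = directory.TrimEnd('\\', '/');
        foreach (string entry in pathValue.Split(new[] { ';' }, StringSplitOptions.RemoveEmptyEntries))
        {
            string candidate = entry.Trim().TrimEnd('\\', '/');
            if (string.Equals(candidate, wanted, StringComparison.OrdinalIgnoreCase))
            {
                return true;
            }
        }
        return false;
    }

    public static void Main()
    {
        string pathValue = Environment.GetEnvironmentVariable("Path") ?? string.Empty;

        foreach (string name in new[] { "JAVA_HOME", "JRE_HOME" })
        {
            string home = Environment.GetEnvironmentVariable(name);
            bool homeOk = IsExistingDirectory(home);
            bool onPath = homeOk && PathContains(pathValue, Path.Combine(home, "bin"));

            Console.WriteLine($"{name} points to an existing directory: {homeOk}");
            Console.WriteLine($"{name}\\bin is listed in Path: {onPath}");
        }
    }
}
```

If either check reports False, revisit the corresponding variable in the system environment variables dialog.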

1.2 Install Tizen Visual Studio Tools

The next job is to install the Tizen-related tools for Visual Studio.

First, open Visual Studio and head to Tools –> Extensions and Updates. In the online section, search for “Tizen” and download the Visual Studio Tools for Tizen extension. Once downloaded, you’ll need to close Visual Studio and follow the prompts to complete the installation (it may take a little while to download the Tizen tools). Once complete, re-open Visual Studio.

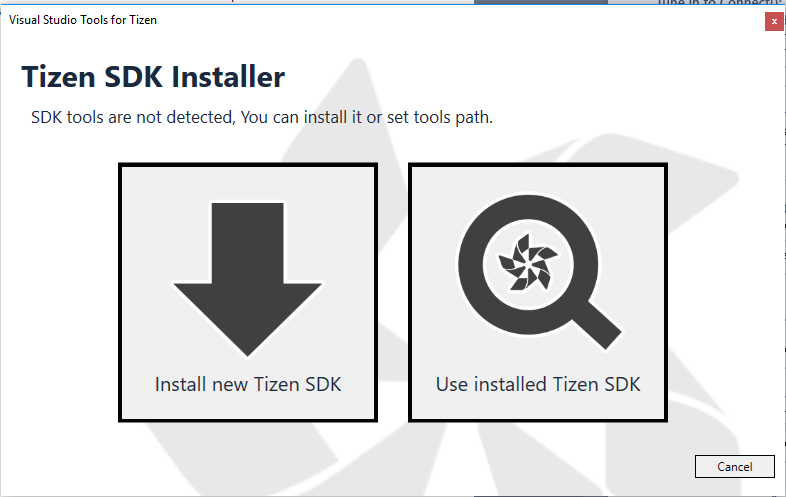

Next, in the Visual Studio menus, head to Tools –> Tizen –> Tizen Package Manager. This will open the Tizen SDK installer. Click the Install new Tizen SDK option as the following screenshot shows:

Choose an install location – you will need to create a new folder yourself – for example C:\TizenSDK

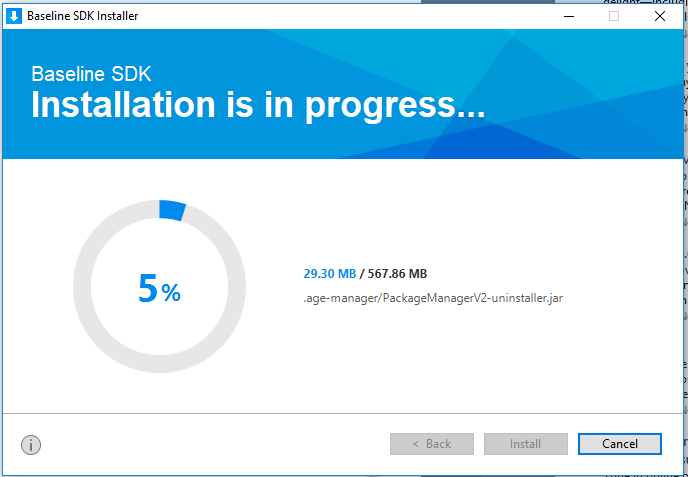

Follow the prompts and you should see the SDK installation proceeding:

You will also be asked to install the Tizen Studio Package Manager; once again, follow the prompts. Be patient: the Package Manager install may spend some time on “Loading package information”.

Once complete, all the dialog boxes should close and you can head back to Visual Studio.

1.3 Install the Samsung Studio TV Extensions

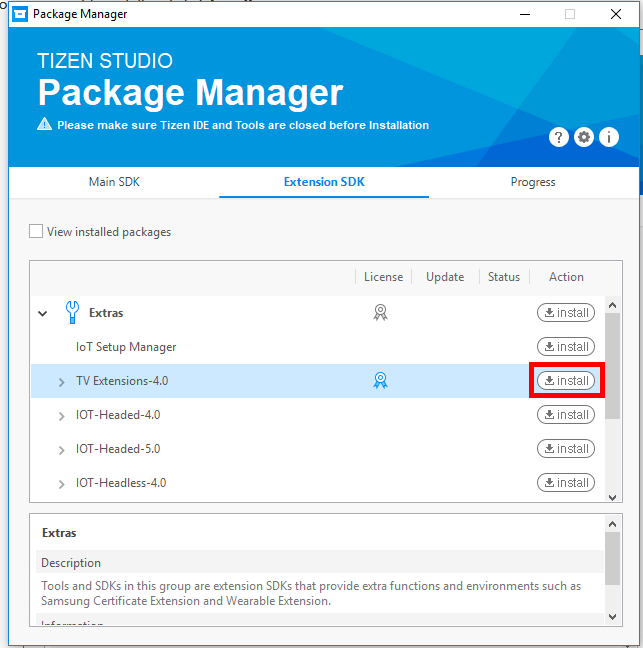

In Visual Studio, head to Tools –> Tizen –> Tizen Package Manager.

Head to the Extension SDK tab and install the TV Extensions-4.0 package:

Once again be patient (the progress bar is near the top of the dialog box).

Once installed, close Package Manager.

1.4 Verify Samsung TV Emulator Installation

Before trying to use the TV emulator, check out the requirements (including turning off Hyper-V): https://developer.tizen.org/development/visual-studio-tools-tizen/installing-visual-studio-tools-tizen

Back in Visual Studio, head to Tools –> Tizen –> Tizen Emulator Manager.

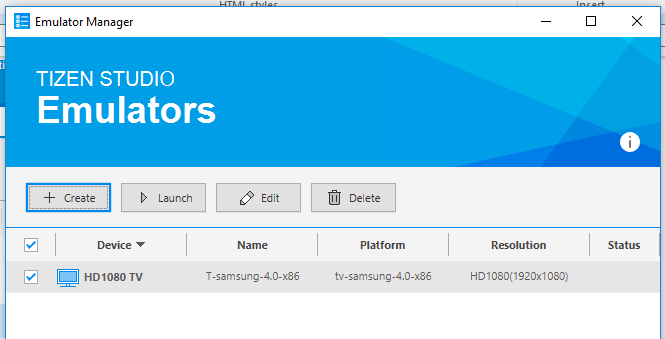

You should see HD 1080 TV in the list of emulators:

1.5 Install Samsung TV .NET App Templates

Head back to Visual Studio’s Tools –> Extensions and Updates menu, once again search online for Tizen, and this time install the Samsung TV .NET App Templates extension. This will give you access to the project templates. You may need to restart Visual Studio for the installation to complete.

Part II – Creating Your First Samsung TV .NET App

2.1 Create a new Samsung TV Project

Re-open Visual Studio and click File –> New Project.

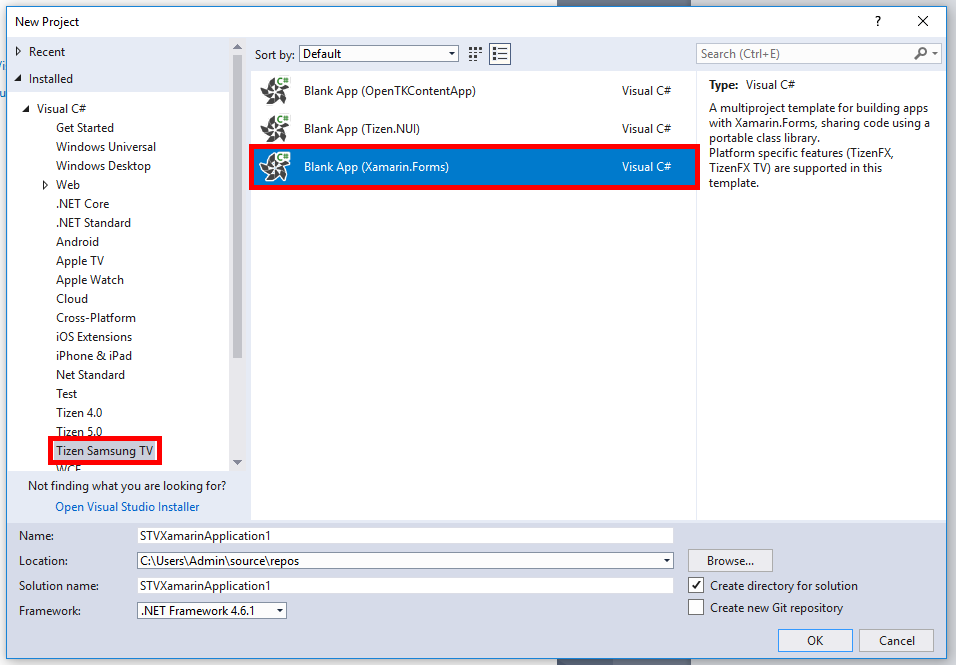

In the Tizen Samsung TV section, choose Blank App (Xamarin.Forms) template:

Click OK. This will create a very basic bare-bones app project that uses Xamarin Forms.

It is a good idea to head to the NuGet package manager and update all the packages, such as the Xamarin Forms and Tizen SDK packages.

Head into the “STVXamarinApplication1” project and open the “STVXamarinApplication1.cs” file, which contains the App class. The file contains the following code:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Text;
    using Xamarin.Forms;

    namespace STVXamarinApplication1
    {
        public class App : Application
        {
            public App()
            {
                // The root page of your application
                MainPage = new ContentPage
                {
                    Content = new StackLayout
                    {
                        VerticalOptions = LayoutOptions.Center,
                        Children = {
                            new Label {
                                HorizontalTextAlignment = TextAlignment.Center,
                                Text = "Welcome to Xamarin Forms!"
                            }
                        }
                    }
                };
            }

            protected override void OnStart()
            {
                // Handle when your app starts
            }

            protected override void OnSleep()
            {
                // Handle when your app sleeps
            }

            protected override void OnResume()
            {
                // Handle when your app resumes
            }
        }
    }

Modify the line Text = "Welcome to Xamarin Forms!" to be: Text = DateTime.Now.ToString()
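For reference, the App class might look roughly like this after the change. It is a trimmed sketch that assumes the template code shown above; the empty lifecycle overrides and unused using directives are left out for brevity, and only the Text line differs from the template.

```csharp
using System;
using Xamarin.Forms;

namespace STVXamarinApplication1
{
    public class App : Application
    {
        public App()
        {
            // The root page of your application
            MainPage = new ContentPage
            {
                Content = new StackLayout
                {
                    VerticalOptions = LayoutOptions.Center,
                    Children = {
                        new Label {
                            HorizontalTextAlignment = TextAlignment.Center,
                            // Changed line: show the date and time at which the app was created
                            Text = DateTime.Now.ToString()
                        }
                    }
                }
            };
        }
    }
}
```

Note that DateTime.Now is evaluated once, when the App object is constructed, so the label shows a fixed timestamp rather than a ticking clock.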

Build the solution and check there are no errors.

2.2 Running a .NET App in the Tizen Samsung TV Emulator

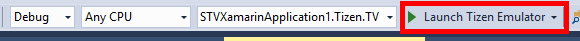

In the Visual Studio tool bar, click Launch Tizen Emulator.

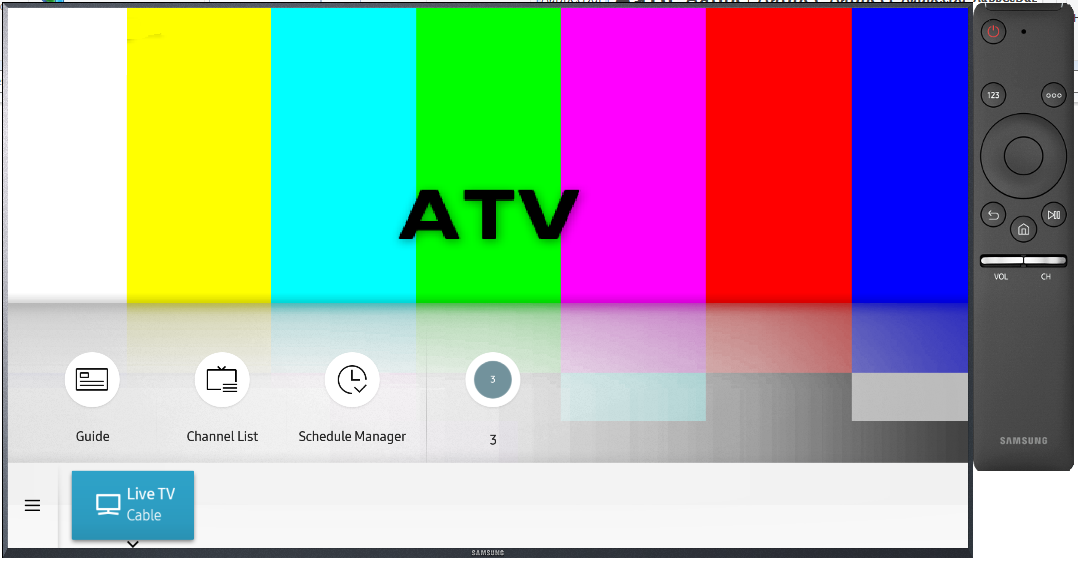

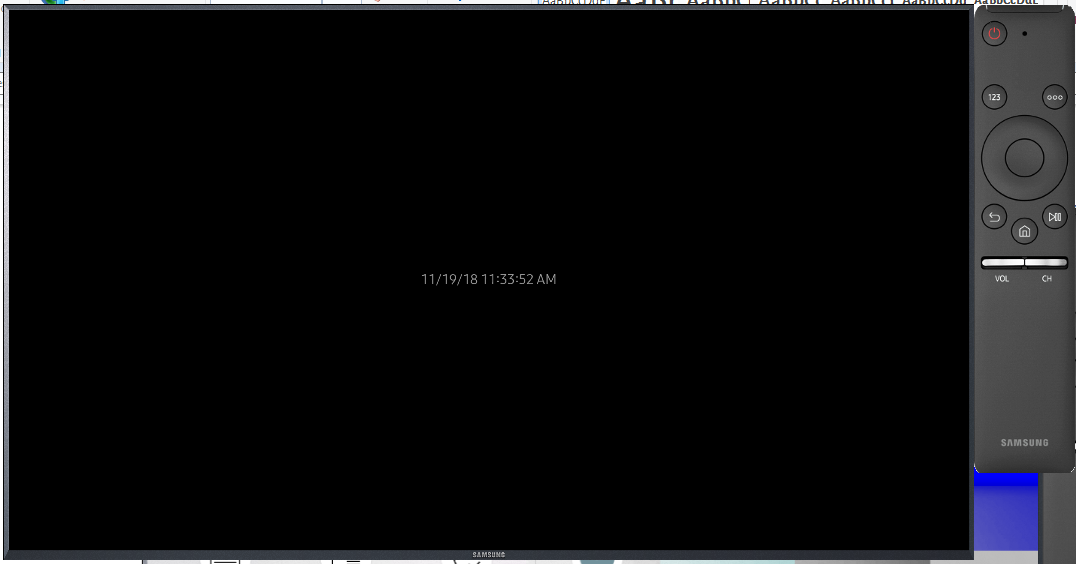

This will open the Emulator Manager. Click the Launch button and the TV emulator will load with a simulated remote as shown below:

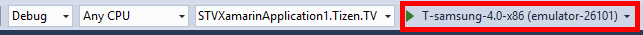

Head back to Visual Studio and click the run button (which should now show something like T-samsung-4.0.x86…):

Once the button is clicked, wait a few moments for the app to be deployed to the emulator. You may have to manually switch back to the emulator if it’s not automatically brought to the front.

You should now see the app running on the emulator and showing the time:

If you want to read more about the Tizen .NET TV Framework, check out the documentation.

If you want to fill in the gaps in your C# knowledge be sure to check out my C# Tips and Traps training course from Pluralsight – get started with a free trial.
