UWP apps can take advantage of the Audio Graph API, which lets you connect audio nodes together into an audio signal processing pipeline, or graph.

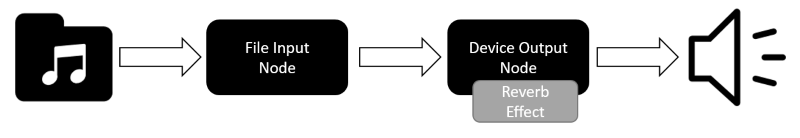

Audio flows from one node to the next, with each node performing a defined task and optionally applying any effects attached to it. One of the simplest graphs uses an AudioFileInputNode to read audio data from a file and an AudioDeviceOutputNode to send it to the device’s sound card or headphones.

A number of nodes are provided out of the box, including the AudioDeviceInputNode to read audio from the microphone, the AudioFileOutputNode to write audio to a file, and the AudioFrameInputNode and AudioFrameOutputNode for custom audio sample processing as part of the graph. As the name suggests, a graph can branch out and recombine as required, for example by sending one input to two other nodes in parallel and then recombining them into a single output node.
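To make the branching idea concrete, here is a minimal sketch of a method that could be added to MainPage once the graph and nodes from the walkthrough below exist. It assumes the _graph, _fileInputNode and _deviceOutputNode fields and the using directives shown later; the method name ConnectParallelPaths, the 0.5 gains and the effect settings are illustrative choices, not part of the original walkthrough. The file input feeds two submix nodes in parallel, each with its own effect, and both submix nodes feed the single device output node. It would be called in place of AddReverb() and ConnectNodes().

```csharp
// Hypothetical helper: branch one input into two parallel paths, then recombine them.
// Relies on the _graph, _fileInputNode and _deviceOutputNode fields from the walkthrough.
private void ConnectParallelPaths()
{
    // Submix nodes are created synchronously and can carry their own effects
    AudioSubmixNode echoBranch = _graph.CreateSubmixNode();
    AudioSubmixNode reverbBranch = _graph.CreateSubmixNode();

    echoBranch.EffectDefinitions.Add(new EchoEffectDefinition(_graph) { Delay = 250 }); // ms
    reverbBranch.EffectDefinitions.Add(new ReverbEffectDefinition(_graph) { DecayTime = 1 });

    // Branch out: the same input feeds both submix nodes, each at half gain
    _fileInputNode.AddOutgoingConnection(echoBranch, 0.5);
    _fileInputNode.AddOutgoingConnection(reverbBranch, 0.5);

    // Recombine: both branches connect to the one device output node
    echoBranch.AddOutgoingConnection(_deviceOutputNode);
    reverbBranch.AddOutgoingConnection(_deviceOutputNode);
}
```

Halving the gain on each branch is one simple way to keep the recombined signal from being roughly twice as loud as the original.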

Audio effects, such as the pre-supplied echo, reverb, EQ, and limiter, can also be added to nodes. Custom effects can be written too, by implementing the IBasicAudioEffect interface and plugging the effect into the graph through an IAudioEffectDefinition.
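As a rough sketch of working with the pre-supplied effects, the members below attach an echo and a limiter to the device output node and show how an attached effect can be switched off and on without removing it. They assume the _graph and _deviceOutputNode fields from the walkthrough below; the names _echoEffect, AddEchoAndLimiter and SetEchoEnabled and the echo values are hypothetical illustrations.

```csharp
// Hypothetical members for MainPage; rely on the _graph and _deviceOutputNode fields.
private EchoEffectDefinition _echoEffect;

private void AddEchoAndLimiter()
{
    _echoEffect = new EchoEffectDefinition(_graph)
    {
        Delay = 500,     // delay between repeats, in milliseconds
        Feedback = 0.3,  // amount of output fed back into the echo (0 to 1)
        WetDryMix = 0.5  // balance of echoed (wet) and original (dry) signal (0 to 1)
    };

    // The limiter is used with its default settings here
    var limiter = new LimiterEffectDefinition(_graph);

    _deviceOutputNode.EffectDefinitions.Add(_echoEffect);
    _deviceOutputNode.EffectDefinitions.Add(limiter);
}

// Effects stay attached to the node but can be toggled while the graph runs
private void SetEchoEnabled(bool enabled)
{
    if (enabled)
    {
        _deviceOutputNode.EnableEffectsByDefinition(_echoEffect);
    }
    else
    {
        _deviceOutputNode.DisableEffectsByDefinition(_echoEffect);
    }
}
```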

Getting Started

To start, create a blank UWP (Windows 10) project and add the following XAML to MainPage.xaml:

```xml
<Page
    x:Class="AudioGraphDemo.MainPage"
    xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
    xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
    xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
    xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
    mc:Ignorable="d">

    <Grid Background="{ThemeResource ApplicationPageBackgroundThemeBrush}">
        <Button Name="Play" Click="Play_OnClick">Play</Button>
    </Grid>
</Page>
```

In the code-behind (MainPage.xaml.cs), start by adding the following using directives:

```csharp
using System;
using System.Threading.Tasks;
using Windows.Media.Audio;
using Windows.Storage;
using Windows.Storage.Pickers;
using Windows.UI.Xaml;
using Windows.UI.Xaml.Controls;
```

Then add the following fields to hold the audio graph itself and its two nodes:

```csharp
private AudioFileInputNode _fileInputNode;
private AudioGraph _graph;
private AudioDeviceOutputNode _deviceOutputNode;
```

Next, create the button click event handler:

```csharp
private async void Play_OnClick(object sender, RoutedEventArgs e)
{
    await CreateGraph();
    await CreateDefaultDeviceOutputNode();
    await CreateFileInputNode();

    AddReverb();
    ConnectNodes();

    _graph.Start();
}
```

We can now add the following methods:

```csharp
/// <summary>
/// Create an audio graph that can contain nodes.
/// </summary>
private async Task CreateGraph()
{
    // Release any graph left over from a previous click before creating a new one
    _graph?.Dispose();

    // Specify settings for the graph; the AudioRenderCategory helps to optimize audio processing
    AudioGraphSettings settings = new AudioGraphSettings(Windows.Media.Render.AudioRenderCategory.Media);

    CreateAudioGraphResult result = await AudioGraph.CreateAsync(settings);

    if (result.Status != AudioGraphCreationStatus.Success)
    {
        throw new Exception(result.Status.ToString());
    }

    _graph = result.Graph;
}

/// <summary>
/// Create a node to output audio data to the default audio device (e.g. sound card).
/// </summary>
private async Task CreateDefaultDeviceOutputNode()
{
    CreateAudioDeviceOutputNodeResult result = await _graph.CreateDeviceOutputNodeAsync();

    if (result.Status != AudioDeviceNodeCreationStatus.Success)
    {
        throw new Exception(result.Status.ToString());
    }

    _deviceOutputNode = result.DeviceOutputNode;
}

/// <summary>
/// Ask the user to pick a file and use the chosen file to create an AudioFileInputNode.
/// </summary>
private async Task CreateFileInputNode()
{
    FileOpenPicker filePicker = new FileOpenPicker
    {
        SuggestedStartLocation = PickerLocationId.MusicLibrary,
        FileTypeFilter = { ".mp3", ".wav" }
    };

    StorageFile file = await filePicker.PickSingleFileAsync();

    // Null check (file is null if the user cancels the picker) omitted for brevity

    CreateAudioFileInputNodeResult result = await _graph.CreateFileInputNodeAsync(file);

    if (result.Status != AudioFileNodeCreationStatus.Success)
    {
        throw new Exception(result.Status.ToString());
    }

    _fileInputNode = result.FileInputNode;
}

/// <summary>
/// Create an instance of the pre-supplied reverb effect and add it to the output node.
/// </summary>
private void AddReverb()
{
    ReverbEffectDefinition reverbEffect = new ReverbEffectDefinition(_graph)
    {
        DecayTime = 1 // seconds
    };

    _deviceOutputNode.EffectDefinitions.Add(reverbEffect);
}

/// <summary>
/// Connect the nodes together to form the graph; in this case there are only two nodes.
/// </summary>
private void ConnectNodes()
{
    _fileInputNode.AddOutgoingConnection(_deviceOutputNode);
}
```

Now when the app runs and Play is clicked, the user can choose a file, which is then played with reverb applied. Check out the MSDN documentation for more information.
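As written, the graph keeps running after the file has finished playing. One possible refinement, sketched here on the assumption that the code lives in MainPage (so the page's Dispatcher is available), is to handle the AudioFileInputNode.FileCompleted event and stop and dispose the graph there. The handler name FileInputNode_FileCompleted is hypothetical, and the sketch also needs a using directive for Windows.UI.Core.

```csharp
// Requires: using Windows.UI.Core; (for CoreDispatcherPriority)
// Hypothetical handler; subscribe once the node exists, e.g. at the end of CreateFileInputNode():
//     _fileInputNode.FileCompleted += FileInputNode_FileCompleted;
private async void FileInputNode_FileCompleted(AudioFileInputNode sender, object args)
{
    // The event is not guaranteed to be raised on the UI thread,
    // so marshal back to it before touching the _graph field
    await Dispatcher.RunAsync(CoreDispatcherPriority.Normal, () =>
    {
        _graph?.Stop();
        _graph?.Dispose();
        _graph = null;
    });
}
```

Clearing the field afterwards means a later click on Play simply builds a fresh graph.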

