When refactoring part of an application, we can use the existing tests to gain some confidence that the refactored code still produces the same results, i.e. the existing tests still pass against the new implementation.

A system that has been in production for some time is likely to have amassed a lot of data that flows through the “legacy” code, including cases the tests never anticipated. So although the existing tests may still pass, the refactored code may produce errors or unexpected results once it is used in production.

It would be helpful as an experiment to run the existing legacy code alongside the new code to see if the results differ, but still continue to use the result of the existing legacy code. Scientist.NET allows exactly this kind of experimentation to take place.

Scientist.NET is a port of the Scientist library for Ruby. It is currently in pre-release and has a NuGet package we can use.

An Example Experiment

Suppose there is an interface, shown in the following code, that describes the ability to sum a sequence of integer values and return the result.

    interface ISequenceSummer
    {
        int Sum(int numberOfTerms);
    }

This interface is currently implemented in the legacy code as follows:

    class SequenceSummer : ISequenceSummer
    {
        public int Sum(int numberOfTerms)
        {
            var terms = new int[numberOfTerms];

            // generate sequence of terms
            var currentTerm = 0;
            for (int i = 0; i < terms.Length; i++)
            {
                terms[i] = currentTerm;
                currentTerm++;
            }

            // Sum
            int sum = 0;
            for (int i = 0; i < terms.Length; i++)
            {
                sum += terms[i];
            }

            return sum;
        }
    }

As part of the refactoring of the legacy code, this implementation is to be replaced with a version that utilizes LINQ as shown in the following code:

    using System.Linq;

    class SequenceSummerLinq : ISequenceSummer
    {
        public int Sum(int numberOfTerms)
        {
            // generate sequence of terms: 0, 1, ..., numberOfTerms - 1
            var terms = Enumerable.Range(0, numberOfTerms).ToArray();

            // sum
            return terms.Sum();
        }
    }
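Before wiring up an experiment, it can help to check that a loop-based sum and a LINQ-based sum agree for more than a single input. The following is a minimal, stand-alone sketch of such a check; `SequenceSumCheck`, `SumWithLoop`, `SumWithLinq`, and `FirstMismatch` are hypothetical names introduced here for illustration and are not part of the original code or of Scientist.NET.

```csharp
using System;
using System.Linq;

// Hypothetical helper for illustration only.
public static class SequenceSumCheck
{
    // Loop-based sum of 0, 1, ..., numberOfTerms - 1 (mirrors the legacy approach).
    public static int SumWithLoop(int numberOfTerms)
    {
        int sum = 0;
        for (int i = 0; i < numberOfTerms; i++)
        {
            sum += i;
        }
        return sum;
    }

    // LINQ-based sum over the same sequence.
    public static int SumWithLinq(int numberOfTerms)
    {
        return Enumerable.Range(0, numberOfTerms).Sum();
    }

    // Returns the first term count in [0, maxTerms] for which the two
    // approaches disagree, or -1 if they agree everywhere in that range.
    public static int FirstMismatch(int maxTerms)
    {
        for (int n = 0; n <= maxTerms; n++)
        {
            if (SumWithLoop(n) != SumWithLinq(n))
            {
                return n;
            }
        }
        return -1;
    }
}
```

Calling `SequenceSumCheck.FirstMismatch(1000)` returns -1, since both approaches agree on that range. One difference a check like this will not reveal on small inputs: `Enumerable.Sum` over `int` values throws an `OverflowException` on overflow, whereas a plain loop in the default unchecked context wraps silently. That is the kind of production-only difference an experiment is meant to surface.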

After installing the Scientist.NET NuGet package, an experiment can be created using the following code:

    int result;

    result = Scientist.Science<int>("sequence sum", experiment =>
    {
        experiment.Use(() => new SequenceSummer().Sum(5)); // current production method
        experiment.Try("Candidate using LINQ", () => new SequenceSummerLinq().Sum(5)); // new proposed production method
    }); // return the control value (result from SequenceSummer)

This code runs the .Use(…) code, which contains the existing legacy implementation, and also the .Try(…) code, which contains the new implementation. Scientist.NET stores both results for reporting and then returns the result of the .Use(…) code for use by the rest of the program. Any differences can therefore be reported without changing the behavior of the production code. At some point in the future, if the results of the legacy code (the control) consistently match those of the new code (the candidate), the refactoring can be completed by removing the old implementation (and the experiment code) and simply calling the new implementation.
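To make the control/candidate pattern concrete, here is a hand-rolled sketch of the idea: run both code paths, report whether they agree, and always return the control's value. This is not how Scientist.NET is implemented, and `MiniExperiment` and its `Run` method are hypothetical names used only for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical illustration of the pattern; not part of Scientist.NET.
public static class MiniExperiment
{
    // Runs the control and the candidate, reports whether their results are
    // equal, and returns the control's result regardless of the outcome.
    public static T Run<T>(string name, Func<T> control, Func<T> candidate, Action<string> report)
    {
        // Exceptions from the control propagate, exactly as they would
        // if no experiment were in place.
        T controlValue = control();

        try
        {
            T candidateValue = candidate();
            bool matched = EqualityComparer<T>.Default.Equals(controlValue, candidateValue);
            string outcome = matched ? "matched" : "mismatched";
            report($"{name}: {outcome} (control={controlValue}, candidate={candidateValue})");
        }
        catch (Exception ex)
        {
            // A failing candidate is reported but must not affect the caller.
            report($"{name}: candidate threw {ex.GetType().Name}");
        }

        return controlValue;
    }
}
```

For example, `MiniExperiment.Run("sequence sum", () => 10, () => 11, Console.WriteLine)` returns 10 and reports a mismatch. The design point is that the candidate can disagree, or even throw, without changing what the rest of the program sees.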

To get the results of the experiment, a reporter can be written and configured. The following code shows a custom reporter that simply reports to the Console:

    public class ConsoleResultPublisher : IResultPublisher
    {
        public Task Publish<T>(Result<T> result)
        {
            Console.ForegroundColor = result.Mismatched ? ConsoleColor.Red : ConsoleColor.Green;

            Console.WriteLine($"Experiment name '{result.ExperimentName}'");
            Console.WriteLine($"Result: {(result.Matched ? "Control value MATCHED candidate value" : "Control value DID NOT MATCH candidate value")}");
            Console.WriteLine($"Control value: {result.Control.Value}");
            Console.WriteLine($"Control execution duration: {result.Control.Duration}");

            foreach (var observation in result.Candidates)
            {
                Console.WriteLine($"Candidate name: {observation.Name}");
                Console.WriteLine($"Candidate value: {observation.Value}");
                Console.WriteLine($"Candidate execution duration: {observation.Duration}");
            }

            if (result.Mismatched)
            {
                // save mismatched experiments to event log, file, database, etc. for alerting/comparison
            }

            Console.ForegroundColor = ConsoleColor.White;

            return Task.FromResult(0);
        }
    }

To plug this in, set the result publisher before the experiment code is executed:

    Scientist.ResultPublisher = new ConsoleResultPublisher();

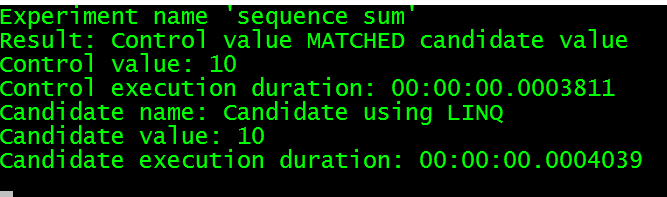

The output of the experiment (and the custom reporter) look like the following screenshot:

To learn more about Scientist.NET check out my Pluralsight course: Testing C# Code in Production with Scientist.NET.
